Photo by Kayla Velasquez on Unsplash

Unveiling the dEFEND Study: How Explainable Fake News Detection Works

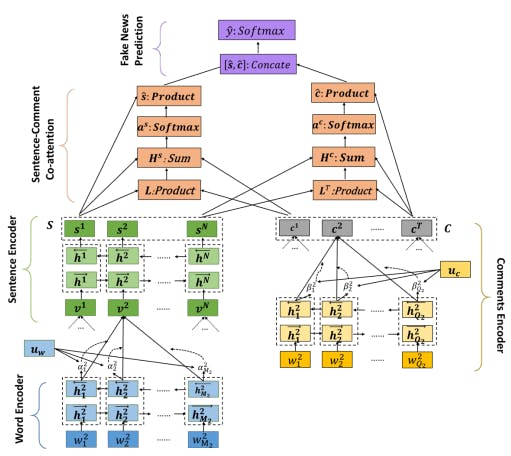

Components

The architecture consists of four components.

(1) News content encoder (word encoder and sentence encoder)

The news content encoder consists of two parts.

Word encoder: A plain RNN tends to lose information from early words as the sentence gets longer. Gated Recurrent Units (GRUs) are therefore used to preserve long-term dependencies, and they are run bidirectionally so that each word's representation reflects the context on both sides. An attention mechanism then weighs the importance of each word in the sentence, and the weighted sum of the word representations forms the sentence vector v.
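To make the attention step concrete, here is a minimal sketch in plain Python. It assumes the bidirectional GRU has already produced one hidden state per word, and it uses a common additive attention form (a tanh projection scored against a context vector). The names `attention_pool`, `W`, `b`, and `context` are illustrative and not taken from the paper's code.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(hidden_states, W, b, context):
    """Collapse per-word hidden states into one sentence vector.

    hidden_states: list of T vectors (length d), e.g. BiGRU outputs.
    W (k x d), b (k), context (k): hypothetical learned parameters.
    """
    scores = []
    for h in hidden_states:
        # u = tanh(W h + b): project the word state into attention space
        u = [math.tanh(sum(w * x for w, x in zip(row, h)) + b_i)
             for row, b_i in zip(W, b)]
        # score = u . context: how relevant this word looks
        scores.append(sum(u_i * c_i for u_i, c_i in zip(u, context)))
    weights = softmax(scores)
    d = len(hidden_states[0])
    # The attention-weighted sum of hidden states is the sentence vector v
    v = [sum(a * h[j] for a, h in zip(weights, hidden_states))
         for j in range(d)]
    return v, weights

# Toy input: three words, hidden size 2, attention size 2
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
context = [1.0, 1.0]
v, weights = attention_pool(H, W, b, context)
```

In this toy input the third word receives the largest weight because its projected state aligns best with the context vector. In a trained model, `W`, `b`, and `context` would be learned along with the rest of the network.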

Sentence encoder: A bidirectional GRU runs over the sequence of sentence vectors v produced by the word encoder, so that each sentence's representation also captures context from the surrounding sentences.
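The bidirectional pass can be sketched as follows. This is a toy, dependency-free GRU written for illustration only: the gate convention (new state = (1 - z) * old + z * candidate), the random initialisation, and the names `init_gru`, `gru_step`, `run_gru`, and `bigru` are assumptions, and a real implementation would use a deep learning library.

```python
import math
import random

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def init_gru(input_dim, hidden_dim, rng):
    """Random parameters (W, U, b) for the z, r and candidate gates."""
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                for _ in range(rows)]
    return {g: (mat(hidden_dim, input_dim),
                mat(hidden_dim, hidden_dim),
                [0.0] * hidden_dim)
            for g in ("z", "r", "h")}

def gru_step(p, x, h):
    def gate(name, h_in, act):
        W, U, b = p[name]
        return [act(a + c + bi)
                for a, c, bi in zip(matvec(W, x), matvec(U, h_in), b)]
    z = gate("z", h, sigmoid)  # update gate: how much to overwrite
    r = gate("r", h, sigmoid)  # reset gate: how much history to consult
    cand = gate("h", [ri * hi for ri, hi in zip(r, h)], math.tanh)
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]

def run_gru(p, inputs, hidden_dim):
    h = [0.0] * hidden_dim
    outputs = []
    for x in inputs:
        h = gru_step(p, x, h)
        outputs.append(h)
    return outputs

def bigru(fwd, bwd, inputs, hidden_dim):
    f = run_gru(fwd, inputs, hidden_dim)
    # Run the second GRU right-to-left, then restore the original order
    b = run_gru(bwd, inputs[::-1], hidden_dim)[::-1]
    # Concatenate both directions for every position
    return [fi + bi for fi, bi in zip(f, b)]

rng = random.Random(0)
sentence_vectors = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6],
                    [0.7, 0.8, 0.9], [0.2, 0.1, 0.0]]
fwd = init_gru(3, 2, rng)
bwd = init_gru(3, 2, rng)
contextual = bigru(fwd, bwd, sentence_vectors, 2)
```

Each entry of `contextual` has twice the hidden size: the first half summarizes everything up to that sentence, the second half everything after it.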

(2) User comment encoder

Like the word encoder, the user comment encoder uses a bidirectional GRU with an attention mechanism: it learns a latent representation of each word in a comment and combines these representations into a comment vector.

(3) Sentence-comment co-attention

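Sentence-comment co-attention captures the semantic connections between the sentences of the article and the user comments. It assigns attention weights to sentences and comments jointly, so the model can focus on the sentences and comments that are most relevant to each other. These weights support the classification and also indicate which sentences and comments influenced the decision, which is where the model's explainability comes from.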
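One way to sketch co-attention is with an affinity matrix between every comment and every sentence, which is then used to compute attention weights on both sides. The version below loosely follows that affinity-matrix formulation; all parameter names (`W_l`, `W_s`, `W_c`, `w_hs`, `w_hc`) and the toy values are assumptions for illustration, not the authors' implementation.

```python
import math

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_sum(weights, vectors):
    return [sum(w * v[j] for w, v in zip(weights, vectors))
            for j in range(len(vectors[0]))]

def co_attention(S, C, W_l, W_s, W_c, w_hs, w_hc):
    """S: sentence vectors, C: comment vectors (same dimension d)."""
    n, t, k = len(S), len(C), len(w_hs)
    # Affinity F[j][i] between comment j and sentence i
    F = [[math.tanh(dot(c, matvec(W_l, s))) for s in S] for c in C]
    Ps = [matvec(W_s, s) for s in S]  # projected sentences
    Pc = [matvec(W_c, c) for c in C]  # projected comments
    # Each sentence also sees the comments, weighted by affinity
    Hs = [[math.tanh(Ps[i][m] + sum(F[j][i] * Pc[j][m] for j in range(t)))
           for m in range(k)] for i in range(n)]
    # Each comment also sees the sentences, weighted by affinity
    Hc = [[math.tanh(Pc[j][m] + sum(F[j][i] * Ps[i][m] for i in range(n)))
           for m in range(k)] for j in range(t)]
    a_s = softmax([dot(w_hs, h) for h in Hs])  # sentence attention
    a_c = softmax([dot(w_hc, h) for h in Hc])  # comment attention
    return weighted_sum(a_s, S), weighted_sum(a_c, C), a_s, a_c

# Toy input: three sentences, two comments, identity projections
S = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
C = [[1.0, 0.0], [0.5, 0.5]]
I = [[1.0, 0.0], [0.0, 1.0]]
s_hat, c_hat, a_s, a_c = co_attention(S, C, I, I, I, [1.0, 1.0], [1.0, 1.0])
```

The weights `a_s` and `a_c` are the interpretable part: sorting sentences and comments by them yields a ranked list of the items the model attended to most.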

(4) Fake news predictions

In the final step, the two vectors produced by the co-attention layer (one summarizing the sentences, one summarizing the comments) are concatenated and passed to a softmax layer, which outputs the prediction of whether the news is fake or real. Because both vectors are weighted by the co-attention layer, the prediction draws on the semantic relationships identified between the news content and the comments.
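As a rough illustration of this step, the sketch below concatenates a sentence summary and a comment summary, applies one linear layer, and normalizes with softmax. The weights `W_f` and `b_f`, the input values, and the label order (index 0 = real, index 1 = fake) are all assumptions chosen for the example.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(s_hat, c_hat, W_f, b_f):
    """Return class probabilities from the two co-attention outputs."""
    features = s_hat + c_hat  # list concatenation: [s_hat, c_hat]
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(W_f, b_f)]
    return softmax(logits)

# Hypothetical co-attention outputs and classifier weights
s_hat = [0.2, 0.8]
c_hat = [0.6, 0.4]
W_f = [[1.0, 0.0, 1.0, 0.0],   # row for class 0 ("real", assumed order)
       [0.0, 1.0, 0.0, 1.0]]   # row for class 1 ("fake", assumed order)
b_f = [0.0, 0.0]

probs = predict(s_hat, c_hat, W_f, b_f)
label = ["real", "fake"][probs.index(max(probs))]
```

During training, these probabilities would typically be compared with the true labels through a cross-entropy loss.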

Note: The diagram is taken from the research paper itself for explanatory purposes.

References

Shu, K., Cui, L., Wang, S., Lee, D., & Liu, H. (2019). dEFEND: Explainable Fake News Detection. doi:10.1145/3292500.3330935